Wednesday, 28 December 2022

The Fully Credentialed Data Science Professional

Monday, 26 December 2022

Sitting Down with John Linford, Security & OTTF Forum Director, The Open Group

Please can you tell us how long you have been in your role and what you enjoy most about it?

What has your journey with The Open Group been like so far?

Are there any new updates within your Forum that you can share?

Can you tell us about any exciting updates planned within your Forum?

What are you most looking forward to for the year ahead?

Is there any advice you can give for those looking to start in the industry?

If you could give advice to your respective self before starting in your role, what would it be?

Friday, 15 July 2022

The Open FAIR™ Body of Knowledge: Gaining Awareness and Adoption Internationally

Open FAIR has seen rapid and extensive adoption in the US, where it has become the de facto standard for quantifying cybersecurity risk. We at The Open Group are encouraged that Open FAIR awareness and adoption are also increasing globally, and we’ve seen increased usage outside of the traditional IT risk quantification area. Some interesting recent developments in Open FAIR use and adoption outside of the US, and outside of the IT area, include:

1- A recent standard published by the European Committee for Standardization (CEN), EN 17748-1:2022 Foundational Body of Knowledge for the ICT Profession (ICT BoK) – Part 1: Body of Knowledge, references Open FAIR (among other standards of The Open Group) as an informative reference standard for risk analysis. This is an important development, as it brings Open FAIR to the attention of enterprises in Europe as a risk quantification method. CEN is a collaborating standards body with ISO (for Europe), as is The Open Group (we are PAS submitters to ISO, enabling fast-track adoption of Open Group standards by the International Organization for Standardization).

2- At the recent Open Group Brazil Security Web Event, we heard several presentations that described the use of Open FAIR in risk quantification, and the presentations were greeted with enthusiasm by the Brazil attendees. One of the presentations was from Modulo, a leading IT-GRC company and a member of the Security Forum, which kindly volunteered to translate the Open FAIR standards into Brazilian Portuguese, paving the way for further adoption in Brazil and Portugal.

3- Among the many people certified to Open FAIR, we now have 29% of the total population from outside the US, which is another sign of interest internationally. The percentage from outside of the US has grown considerably, with many countries with Open FAIR certified people now represented, including significant numbers of certified people from Australia, Brazil, Belgium, Canada, Denmark, France, Germany, India, Ireland, Italy, Netherlands, Peru, Singapore, South Africa, Spain, Sweden, Switzerland, and the UK.

4- Another indicator of international interest is the recent growth of Open FAIR commercial license holders based outside of the US. Here, 48% of the current twenty-five commercial licensees are based outside of the US. This tells us that the commercial interest in Open FAIR is growing for trainers, consultants, and software tool providers outside of the US.

5- Finally, as regards Open FAIR use outside of the traditional IT risk quantification use cases, we’re seeing more adoption in Operational Technology (OT) areas, including OT risk quantification in critical infrastructure such as oil and gas. With the increased regulatory focus on cybersecurity risk, our Security Forum has also recently released papers on vetting cyber risk models and on the use of the Open FAIR models to calculate reserves for cybersecurity risk (see: https://publications.opengroup.org/security-library/w221).

The Open Group Security Forum is actively working to update the materials that support and complement the Open FAIR Body of Knowledge, ensuring consistency in the guidance provided. This work includes publishing the Open FAIR Risk Analysis Example Guide in July of 2021, updating the Open FAIR Risk Analysis Process Guide (in progress) to ensure alignment with the standards, and developing a guide on the mathematics implicit in the Open FAIR methodology (in progress), as well as updating the Open FAIR Certification Program materials, such as the Introduction to the Open FAIR Body of Knowledge White Paper (in progress). Additional recent publications include the Calculating Reserves for Cyber Risk White Paper series, which emphasizes the use of Open FAIR in communicating to financial institutions how cyber risk can be quantified in economic terms as well as to calculate reserve requirements.

Source: opengroup.org

Monday, 27 June 2022

ZiRA, The Dutch Hospital Reference Architecture, A Tool To Address A Worldwide Need

A Standard Frame of Reference for Hospitals

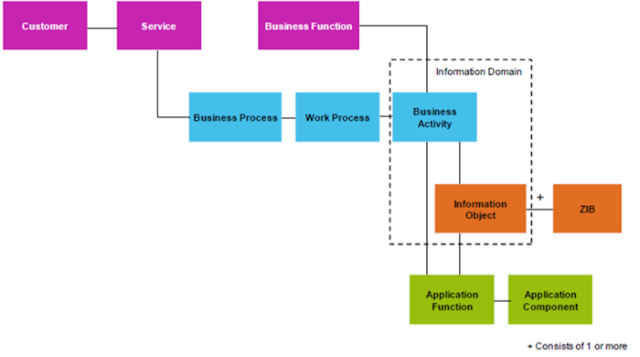

The purpose of this blog is to introduce one such Dutch healthcare innovation—known as ZiRA—to a broad audience of English-speaking architects. In Dutch, a hospital is a “Ziekenhuis,” thus ZiRA means a “hospital reference architecture (RA).” Specifically, it is a set of interlocking components (templates, models, and downloadable files) that provide architects, managers, and high-level decision-makers tools they can use to a) understand and describe the current state of their hospital and b) transform virtually any aspects of their business to achieve desired states. The ZiRA can help users accomplish necessary, mission-critical objectives, including constantly evolving to provide high-quality health services, to improve patient outcomes, to enhance patient experience, and, generally, to operate efficiently and effectively.

The Power of Public and Private Partnerships

ZiRA is a product of Nictiz, the Dutch competence center for electronic exchange of health and care information. Nictiz is an independent foundation that is funded almost entirely by the Dutch Ministry of Health, Welfare, and Sport. For over a decade, Nictiz has encouraged ZiRA adoption by facilitating the establishment of collaboratives such as iHospital, a group composed of and led by key stakeholders from hospitals and related organizations across the Netherlands.

Bringing ZiRA to a Broader Audience

Until now, ZiRA has been available in Dutch only, which has likely limited its broader adoption. Through the efforts of The Open Group Healthcare Forum (HCF) in collaboration with Nictiz and the ZiRA Governance Board, a complete English translation and clarification is underway. As of June 23, 2022, the first of two parts, entitled Hospital Reference Architecture: Understanding and Using the Dutch Ziekenhuis Referentie Architectuur (ZiRA), Part 1, is available at no charge in The Open Group Library. In the Preface to this White Paper, the HCF discusses how Enterprise Architecture can help hospitals deliver higher value to patients and increase their functional efficiency.

Relating ZiRA to The Open Group Healthcare Enterprise Reference Architecture

The Open Group O-HERA™ standard, an industry standard healthcare reference architecture, provides a high-level conceptual framework that is relevant to all key stakeholders across all healthcare domains. Thus, the O-HERA standard is presented at a higher level of abstraction, whereas ZiRA is tailored to address specific needs and objectives of hospitals. The O-HERA standard makes it possible to create a crosswalk between the principles and objects modeled in ZiRA (primarily focused on the Architecture Model) and a variety of other emerging and possibly less mature healthcare reference models worldwide.

10,000 Foot View: Applying Reference Architectures to the Health Enterprise Level

In 2018, The Open Group published the O-HERA Snapshot. This resource provides a cognitive map and conceptual guide that helps healthcare professionals consistently define their enterprise architectures for the benefit of effectively aligning information technology and other resources to solve business problems.

As depicted in Figure 1 below, the O-HERA is based on the conventional “plan-build-run” concepts gainfully employed by many industries for decades. In the “PLAN” phase (or “management model”), the organization focuses on vision, mission, strategy, capability, and transformational outcomes. In the “BUILD” phase (or “architecture model”), the organization addresses processes, information, applications, and technologies. Finally, the “RUN” phase (consistent with an “operations model”) emphasizes operations, measurement, analysis, and evolution. Security, essential to the effective exchange of healthcare information, pervades the entire model. As shown in the center of the diagram, the O-HERA standard is based on agility, a person-centric focus, and a strong preference for modular solutions.

The Vital Importance of Industry Standards

How A Reference Architecture Benefits Communication

A ZiRA Use Case: Interoperability

Monday, 6 June 2022

The IT4IT™ Standard Streamlines Plug-and-Play Adoption of Business Tools

Imagine a technology implementation strategy that works harmoniously for both you as the customer and your suppliers. Whether you’re an Enterprise Architect, Digital Practitioner, Sponsor, or Vendor, it’s not as impossible as it seems when you’re utilizing the IT4IT™ Reference Architecture, a standard of The Open Group and a game-changing foundation for digital systems professionals.

We set out to solve the most common and most costly implementation issues, which include managing a complex landscape of different processes and tools, multi-vendor integration, automation, and end-to-end support.

For tool vendors and suppliers, the solution means enabling a plug-and-play implementation of their tools, driving the ease of adoption of their products, and ultimately increasing revenue opportunities.

For Digital Practitioners and other customers, solutions ensure that new tools can be easily plugged into a multi-vendor end-to-end tool chain in support of specific value streams or even the entire digital product delivery pipeline, improving interoperability across the ecosystem, reducing costs, avoiding outages, and enabling business process automation.

Benefits and Outcomes

Establishing the IT4IT Reference Architecture as the standard that your suppliers must meet removes lengthy discussions of “how” to integrate and makes the on-boarding and off-boarding of suppliers faster, easier, and more cost-effective on both sides.

Enterprise Architects, Digital Practitioners, Sponsors, and Vendors report increased success and satisfaction when collaborating across standardized systems and interfaces that seamlessly share data. Deploying new tools is faster, more automated, and offers better end-to-end system insights and measurements. With improved communication and support between multiple vendors, integration requires less effort and costs less to maintain, allowing vendors the opportunity for increased business.

Also, developing a clear vision of the target tool integration landscape supports both a given value stream and the entire value chain, allowing businesses greater agility when it’s time to adopt new practices and tools, e.g., DevOps, CI/CD, GitOps.

Identifying Key Challenges

Before we could identify solutions, we evaluated specific challenges from multiple stakeholder perspectives, finding unanimous dissatisfaction with integration becoming expensive and cumbersome for both customers and suppliers.

Sponsors struggle with agility, unable to easily adapt to changes in business demand. Often redundancies occur across multiple tool vendors causing unnecessary cost increases and significantly reducing ROI. They also lack automated end-to-end flow of information, making it difficult to generate strategic business insight for decision-making.

Enterprise Architects waste time inefficiently integrating data across value streams with overlapping capabilities, with no industry standard common data model, and no ability to automate.

Digital Practitioners are left with unreliable, incompatible, or incomplete data, and are resistant to integrate a new supplier or tool due to the risk, complexity, and expense.

Meanwhile, the vendors seeking to offer solutions are faced with evaluating and rationalizing this messy portfolio of product offerings and are therefore not well equipped to convince a client that they can help enable their success.

Clearly all stakeholders would benefit by moving away from proprietary platforms and siloed solutions for specific processes and functions.

Implementing Solutions

Utilizing the IT4IT standard as a blueprint helps deliver a consolidated, modern, and automation-ready tool chain that accelerates flow, lowers the cost and complexity of digital product delivery, and enables an efficient exchange of data across the tooling landscape.

Customers and Digital Practitioners use the IT4IT standard to analyze and assess the current state of their tooling integration landscape, identifying gaps and overlaps in each tool’s functionality and interface, and enabling them to better understand the most effective ways to evolve their digital strategy with the needs of their business. They can improve transparency, traceability, and broad awareness of the data flows between teams and their tools, including which vendors and tools are already in place, which bottlenecks need scaling and automation, which self-service/self-healing capabilities could be offered, and where Artificial Intelligence (AI) and Machine Learning (ML) could be utilized.

Vendors can offer standardized interfaces and data models in their toolsets based on the IT4IT Reference Architecture, which provides for more automation and speedier delivery with more consistent, reliable information.

When decision-makers strategically engage with tool vendors that adhere to an industry standard data model, they ensure improved consistency, efficiency, and effectiveness for all stakeholders.

Source: opengroup.org

Friday, 3 June 2022

The IT4IT™ Reference Architecture is a Digital Product Blueprint for Cost Savings and Automation

How many failed and derailed technology solution implementations has your company been through? It’s past time to reevaluate your digital transformation strategy. Become the hero of your business by implementing a standardized operational backbone that saves money, automates processes, and reduces the waste and friction in your current technology solutions, keeping you moving efficiently and effectively ahead of your competitors.

Enterprise Architects, Digital Practitioners, Release Chain Engineers, Release Chain Managers, Digital Product Development Teams, and CIOs may relate to many of today’s most common challenges, such as when moving to the cloud, deploying Agile or DevOps, undergoing a digital transformation, or moving to a product-centric operating model. It may seem inevitable to suffer through the risk and pain of fragmentation, redundancy, insufficient structure, error-prone manual processes, and a general lack of understanding across the value chain. Reaching agility, scalability, and extensibility is possible when rationalization, standardization, and automation are the foundation of your processes.

The IT4IT™ Reference Architecture, a standard of The Open Group, is a game-changing foundation for digital systems professionals. It provides prescriptive guidance to design, source, and manage services across a full range of value chain activities. It’s a blueprint for increasing operational efficiency so that a company can deliver maximum value for the least possible cost with the most predictability. It may sound too good to be true, so we’ll explain how it’s done and why we’re so confident.

The Open Group completed case studies from a cross-section of vertical industries, including Oil and Gas, Finance and Insurance, and the Technology sector, which show how they have used the IT4IT Reference Architecture to add value to their businesses with automation projects, streamlining software and service portfolios, and transforming to more agile, digital working models.

Known Benefits

It’s been said, “an hour of planning can save you ten hours of doing,” but only when you have a proven and effective strategy.

Digital systems teams running on the IT4IT engine reap large rewards in cost savings and automation, freeing up valuable resources for continuous innovation. Especially for teams that act as integrators to multiple suppliers, having an effective “how” discussion at the start benefits everyone by removing costly, time-consuming, and error-prone implementations.

With IT4IT usage at the core, CIOs demonstrate that providing a consolidated, modernized, and automated tool chain that accelerates the adoption of digital transformation while reducing costs isn’t a myth. Release Chain Managers efficiently build an integrated set of tools to facilitate digital product delivery. Digital Product Development Teams seamlessly co-create a target architecture with the business. And with all this success, team members experience less stress and more job satisfaction, and are less likely to quit during an implementation, which is even more valuable in today’s challenging job market.

Identifying Challenges, Analyzing Data, and Finding Solutions

Reaching this technology Utopia requires taking a hard and honest look at your current processes and systems. You might find a surprising lack of understanding of the tooling landscape, hidden potential issue areas, poor training plans for demonstrating how digital product tools are integrated with other tools, redundant capabilities, and the potential for these cost-draining issues to occur again and again as your business evolves. What Enterprise Architects, Digital Practitioners, Release Chain Engineers, Release Chain Managers, Digital Product Development Teams, and CIOs have in common is their need to demonstrate that there is effective control of the investment, in both time and money, including the ability to bring in or retire tools with little disruption.

Reliable solutions begin by using the IT4IT Standard. The multi-step process begins with rationalization by creating a structured inventory of your current tools and plotting them against their capabilities and functional components. This includes components such as the application type, estimated costs, user base, value stream and capability map, and scoring the strategic fit, value, risk, and customer satisfaction. Refer to our full case study for a value stream and capability mapping example.

The initial analysis will highlight your business’ key challenges and gaps. Often the most critical find is in multiple tools supporting the same capability which has a direct negative impact on cost and complexity. Whether redundant or not, you may also identify capabilities that are poorly supported either due to their custom-built structure or the number of manual activities required, such as in testing and deployment. Existing tools may also lack the integrations required for data flows between the data objects they host. These findings are extremely common, and many are solvable.
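The rationalization step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tool names, capability names, costs, and the helper functions `map_capabilities`, `find_overlaps`, and `find_gaps` are all hypothetical and do not come from the IT4IT standard or the case study.

```python
from collections import defaultdict

# Hypothetical tool inventory; names, capabilities, and costs are illustrative only.
inventory = [
    {"tool": "TrackerA", "capabilities": ["Incident Management", "Change Management"], "annual_cost": 120_000},
    {"tool": "TrackerB", "capabilities": ["Incident Management"], "annual_cost": 80_000},
    {"tool": "DeployScripts", "capabilities": ["Deployment"], "annual_cost": 15_000},
]

# Hypothetical list of capabilities the business expects its tool chain to support.
required_capabilities = ["Incident Management", "Change Management", "Deployment", "Testing"]


def map_capabilities(inventory):
    """Plot each tool against the capabilities it supports."""
    by_capability = defaultdict(list)
    for entry in inventory:
        for capability in entry["capabilities"]:
            by_capability[capability].append(entry["tool"])
    return dict(by_capability)


def find_overlaps(by_capability):
    """Capabilities supported by more than one tool (candidate redundancies)."""
    return {cap: tools for cap, tools in by_capability.items() if len(tools) > 1}


def find_gaps(by_capability, required):
    """Required capabilities that no tool in the inventory supports."""
    return [cap for cap in required if cap not in by_capability]


capability_map = map_capabilities(inventory)
overlaps = find_overlaps(capability_map)
gaps = find_gaps(capability_map, required_capabilities)

print("Overlapping capabilities:", overlaps)
print("Unsupported capabilities:", gaps)
```

A real inventory would also carry the scoring attributes mentioned earlier (strategic fit, value, risk, customer satisfaction); they are left out here to keep the sketch short.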

Once analyzed, your business will create a vision of the target tool chain based on a new target technology operating model. This starts by identifying your digital technology management strategy and vision and identifying key gaps that need addressing, including where DevOps may enable improvements in productivity and effectiveness. You’ll explore how you might enable the building blocks for innovation, agility, scalability, and extensibility, deliver self-service, and leverage new technologies such as Artificial Intelligence (AI) and Machine Learning (ML). In the process, you’ll identify existing strategic tools and vendors and opportunities for rationalizing or simplifying your tooling landscape. The result is more integrated and automated processes and support systems which accelerate the flow of work and create transparency and traceability across value streams.

Long-term Outcomes

Businesses worldwide of all sizes and industries using the IT4IT standard report that the benefits are ongoing during and after implementation. The most common long-term benefits include improved support of agile and digital transformation, improved efficiency, and improved effectiveness. Costs are reduced through fewer manual activities, removal of redundancies, and a higher degree of collaboration and information exchange. The insight gained during this process through data transparency will make your business more competitive by ensuring easier adoption of new practices and tools as your business evolves.

Experience the benefits for yourself. If you are an Enterprise Architect, Digital Practitioner, Release Chain Engineer, Release Chain Manager, a CIO, or a member of a Digital Product Development Team, contact us today to learn how tool rationalization and standardization through the IT4IT Reference Architecture can support the building and implementation of your winning strategy.

Source: opengroup.org

Tuesday, 12 April 2022

Why Enterprise Architecture as a Subject is a “Must-Have” Now More Than Ever Before?

A master’s in business administration helps students understand business dynamics better. I believe the ability to see a business as a whole is paramount in today’s era. There is a dire need for students to apply the lens of an Enterprise Architect and break out of the siloed approach so that they can see the business as a single unit. It is pivotal to understand that an enterprise doesn’t run in silos the way the subjects during our MBA might make us think. For an enterprise to run efficiently and effectively, it needs to run collaboratively, i.e., all the fundamental constituents of an enterprise need to make progress in tandem.

“An enterprise is only as strong as the weakest link.”

Let’s take an analogy of the human body. For a human body to run efficiently, it needs an intention and goal, and it requires food as fuel, sleep as refreshment and a family as a support system.

Similarly, an enterprise needs a strategic vision, business alignment to that vision, applications to execute the business strategy, data to act as fuel to propel growth, and technology as the underlying support to keep the enterprise running at all times. When we can see the phases – Business, Application, Data and Technology – as a connected whole, the administration of the business is bound to succeed. Enterprise Architecture ties these phases together as a single navigable unit. Hence this subject is a fantastic opportunity for MBA graduates to connect the learnings of various subjects and apply them to get the enterprise engine moving.

MBA students often appreciate and enjoy drilling down into their subject of choice. There is nothing wrong with this, because it is the essence of mastery. However, I believe there is an urgent need to zoom out and see the panoramic view of the enterprise to augment the deep dive. The ability to see an enterprise as a connected unit would open new frontiers and emerging business models. In addition, the zoom-out view would help future architects know how various components play their part in running an enterprise effectively. With EA as a subject, students would be able to see the big picture, connect technology to business priorities, and help visualize an integrated view across business units (BUs).

Enterprise Architecture as a subject, and knowledge of a reference architecture such as IT4IT™, would help EA aspirants appreciate tools for managing a digital enterprise. As students, we know that various organizations are undergoing digital transformation. But we hardly understand where to start the journey or how to go about the digital transformation if we are left on our own. Knowledge of the TOGAF® Architecture Development Method (ADM) would be a fantastic starting point for answering these questions. The as-is assessment followed by the to-be assessment (or vice versa, depending on context) across business, data, application and technology could be a practical starting point. The “Opportunities and Solutions” phase would help produce a roadmap of initiatives an enterprise can choose for its digital transformation.

Enterprise Architecture as a subject in b-school would cut across various subjects and give students a holistic view. For instance, in a business analytics course, students learn statistical modelling and make data-driven business decisions. With Enterprise Architecture as a subject, they will start to appreciate thoughts like:

◉ Maturity of analytics capability within the enterprise

◉ Importance of single source of truth in a multi-application environment

◉ Importance of maintaining a data catalog with all the data elements and creating matrices like application-data matrix

◉ Technology to support the business analytics application – Is it in the cloud, etc.?

The benefits of having Enterprise Architecture as a “must-have” subject are many. I am confident that MBA graduates with the ability to see the enterprise as a collective unit would help their organizations navigate changing business requirements. And while they are driving this navigation, they would have the know-how to execute changes quickly enough to respond to the changing reality of business. Hence, EA as a subject will help MBA graduates grow their enterprise in a sustainable and resilient way.

Source: opengroup.org

Monday, 21 March 2022

Architectural Data will Guide the 2020s

What technical and financial analytics should CIOs and decision makers expect from Enterprise Architects in 2022?

Enterprises are in the middle of an application explosion and a transformation acceleration.

Looking just at the application landscape: industry surveys tell us that the average enterprise is using 1,295 cloud services, and also runs around 500 custom applications. The worldwide enterprise applications market reached $241 billion last year, growing 4.1% year-over-year in 2020, according to IDC.

The underpinning architectures of enterprises – made up of interactions between people, processes and technology, and often also physical assets (IoT) – are also growing and changing at pace.

Enterprise Architects keep CIOs and business units informed using IT cost calculations and technical and lifecycle metrics.

They will often present costs and technical metrics for the current IT landscape, plus forecasts to inform planning for new business scenarios and digital transformation projects.

Common analysis in past years might have covered:

◉ Counts of applications

◉ Total cost of ownership of applications (also ROI and NPV)

◉ Which processes rely on a particular software or infrastructure (dependency analysis)

◉ How long technology is going to be supported, and when the enterprise needs to transition or upgrade

This basic data is useful but might still leave decision makers wanting additional analysis or tighter granularity.

Business units want to understand how much updated processes or applications will lead to improved technical metrics (uptime, responsiveness) or improvements to processes which are important for successful customer journeys.

Enterprise Architecture Data Analysis in 2022

Data-driven enterprise architecture can now provide greater detail and certainty around forecasts. Architects and business users need to design calculations that roll up data numerically across the architecture, generating required KPIs on demand.

For the data scientists and numerate business analysts, steps such as Add, Subtract, Multiply, Divide, Min, Max, Average and Count are standard. In addition, operations such as Power, Log and Atan can be used to calculate trends, probabilities, attribute values and measure or predict impacts of business decisions.
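As a rough illustration of such roll-up calculations, the following Python sketch applies Count, Add, Min, and Average across a small architecture slice, and uses Power to derive a trend. The application names, figures, and the functions `roll_up` and `annual_growth_rate` are assumptions made for this example; they are not taken from any particular EA tool.

```python
from statistics import mean

# Hypothetical architecture slice; names and figures are illustrative only.
applications = {
    "CRM": {"annual_cost": 200_000, "availability": 0.999},
    "Billing": {"annual_cost": 150_000, "availability": 0.995},
    "Portal": {"annual_cost": 50_000, "availability": 0.990},
}

# Hypothetical relationships: which applications support which business capability.
capability_to_apps = {
    "Customer Management": ["CRM", "Portal"],
    "Invoicing": ["Billing"],
}


def roll_up(capability):
    """Roll application attributes up to a capability using Count, Add, Min, Average."""
    apps = [applications[name] for name in capability_to_apps[capability]]
    return {
        "app_count": len(apps),
        "total_cost": sum(app["annual_cost"] for app in apps),
        "min_availability": min(app["availability"] for app in apps),
        "avg_availability": mean(app["availability"] for app in apps),
    }


def annual_growth_rate(start_value, end_value, years):
    """Compound annual growth rate, an example of a Power-based trend metric."""
    return (end_value / start_value) ** (1 / years) - 1


kpis = roll_up("Customer Management")
print(kpis)
print(f"Cost growth per year: {annual_growth_rate(100_000, 121_000, 2):.1%}")
```

Which aggregate suits which attribute is a design choice: costs are usually summed, while availability is sketched here with both a minimum and an average because either may be the right view depending on the question being asked.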

As well as diagrams and roadmaps, EAs often need to be ready to provide reporting dashboards which include:

Technology Cost Analysis:

◉ On-demand totals of how much out-of-date technologies are costing the business

◉ Total cost of a specific Business Capability or Process – using the connections and relationships in the architecture to attribute portions of costs accurately

◉ More precise cost-of-ownership (e.g., calculating software, support, or external services costs according to business function), including costs of underlying infrastructure or resources used. EAs can calculate the total cost of ownership of out-of-date technologies (available as a pre-built cost simulation in tools such as ABACUS from Avolution)

◉ Cloud migration costs

◉ Technical debt metrics such as remediation cost, complexity, cost of compliance
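Two of the cost analyses listed above, totalling the cost of out-of-date technologies and attributing portions of cost to business capabilities, can be sketched as follows. The technology names, costs, support dates, and usage shares are hypothetical, and the share-based allocation is just one possible attribution rule, not the method of any specific tool.

```python
# Hypothetical technology records; names, costs, and dates are illustrative only.
technologies = [
    {"name": "LegacyDB 9", "annual_cost": 90_000, "end_of_support_year": 2020},
    {"name": "AppServer 12", "annual_cost": 60_000, "end_of_support_year": 2026},
]

# Hypothetical relationships: the fraction of each technology used by each capability.
usage_share = {
    "LegacyDB 9": {"Invoicing": 0.75, "Reporting": 0.25},
    "AppServer 12": {"Invoicing": 0.5, "Customer Management": 0.5},
}


def out_of_date_cost(technologies, current_year):
    """Total annual cost of technologies whose vendor support has already ended."""
    return sum(
        tech["annual_cost"]
        for tech in technologies
        if tech["end_of_support_year"] < current_year
    )


def cost_by_capability(technologies, usage_share):
    """Attribute each technology's cost to capabilities in proportion to usage."""
    totals = {}
    for tech in technologies:
        for capability, share in usage_share[tech["name"]].items():
            totals[capability] = totals.get(capability, 0.0) + tech["annual_cost"] * share
    return totals


legacy_cost = out_of_date_cost(technologies, current_year=2022)
capability_costs = cost_by_capability(technologies, usage_share)

print("Cost of out-of-date technologies:", legacy_cost)
print("Cost per capability:", capability_costs)
```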

Lifecycles & Trends in Metrics

◉ Cost of risks and vulnerabilities associated with applications and technologies

◉ Technology and vendor lifecycle information summaries e.g., Number of years to retirement of a technology

◉ Application portfolio assessments: calculate and chart business fit and technical fit of applications and technologies. E.g., Are our applications using approved technologies, and are these technologies currently being supported by the vendor? (The base data for this analysis can be pulled in from sources such as Technopedia)

◉ Technical KPIs including Response Time, Availability, Reliability, Resource Utilizations

◉ Trends in metrics such as rate of growth in costs, or rate of increase in Availability or Reliability

◉ Machine-learning based predictions: e.g., using lists of applications, lifecycles, financial data and other architectural content. For instance, the machine learning engine in ABACUS from Avolution provides a quantitative prediction of the values which belong in any empty cells. For an ‘empty cell’ for an application, machine learning will propose a TIME (Tolerate-Invest-Migrate-Eliminate) recommendation, which the architects can choose to accept, for a more complete dataset.

Adding KPIs to Diagram-based Enterprise Architecture

◉ Comparison of future vs current state. Architects can dashboard side-by-side comparisons of information or technical architecture designs plus related catalogs and use these and related metrics to monitor transformation projects

◉ Risks associated with specific processes (security ratings and risk ratings can be rolled up from technologies and applications to the processes they support)

◉ Comparing tasks by mapping tasks or processes to capabilities. For instance, as part of a consolidation during a merger or acquisition, architects can calculate costs or technical KPIs on processes to determine efficiency of the two versions of the process.

◉ Dependency analysis of a process: using diagrams and Graph Views to see connections e.g. to highlight where a process is dependent on outdated technology.

◉ Show systems, interfaces and APIs as part of process diagrams
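The risk roll-up and dependency analysis in the list above can be illustrated with a small sketch. Everything here is an assumption for the sake of the example: the process, application, and technology names, the 1 to 5 risk scale, and the choice to roll risk up as the worst (maximum) rating among a process's underlying technologies.

```python
# Hypothetical relationships; all names and ratings are illustrative only.
process_to_apps = {
    "Order to Cash": ["Billing", "CRM"],
    "Onboarding": ["Portal"],
}
app_to_techs = {
    "Billing": ["LegacyDB 9"],
    "CRM": ["AppServer 12"],
    "Portal": ["AppServer 12"],
}
tech_risk = {"LegacyDB 9": 5, "AppServer 12": 2}  # assumed scale: 1 (low) to 5 (high)
outdated_techs = {"LegacyDB 9"}


def technologies_for(process):
    """Follow process -> application -> technology relationships."""
    techs = set()
    for app in process_to_apps[process]:
        techs.update(app_to_techs[app])
    return techs


def process_risk(process):
    """Roll technology risk up to the process as the worst-case (maximum) rating."""
    return max(tech_risk[tech] for tech in technologies_for(process))


def processes_on_outdated_tech():
    """Dependency analysis: processes that rely on at least one outdated technology."""
    return sorted(
        process for process in process_to_apps
        if technologies_for(process) & outdated_techs
    )


for process in process_to_apps:
    print(process, "risk rating:", process_risk(process))
print("Depends on outdated technology:", processes_on_outdated_tech())
```

Taking the maximum treats a process as only as safe as its riskiest dependency; an average or weighted score would be an equally valid rule if the architecture team prefers a less conservative view.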

Analysis by Architects on data from APIs

Architects can also present data pulled in from CMDBs or other company data sources (via API queries) as stakeholder dashboards. Charts and interactive visuals are often clearer and easier to explain than lists of data.

◉ Architects can use tools such as Postman to run queries on APIs

◉ Common API integrations include Technopedia, a range of CMDBs, and VMware products

We’re in the foothills of a golden age of architectural analysis. Businesses understand their external environment and run their sales functions with data from business intelligence tools and modern sales platforms. They are applying the same quantitative approach to their internal enterprises, a joined-up universe of data on people, processes and technologies.

Source: opengroup.org

Friday, 3 December 2021

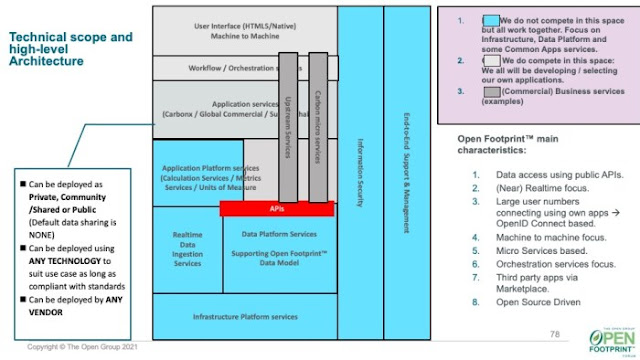

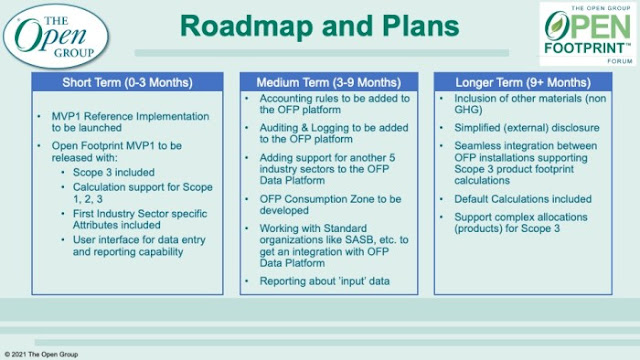

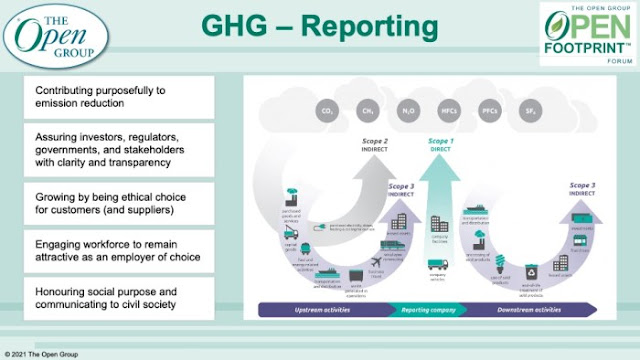

The Open Group Open Footprint™ Forum Global Event Highlights Blog

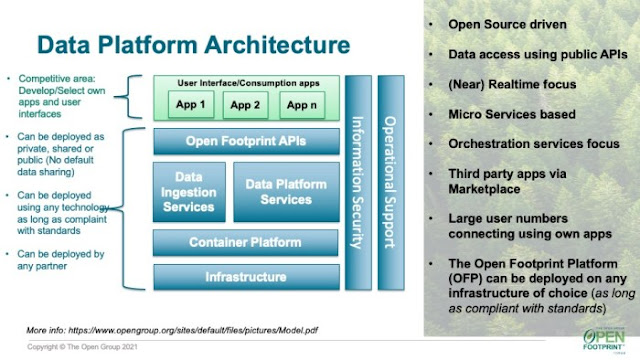

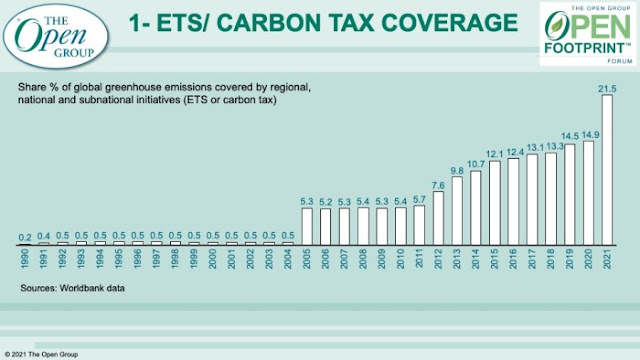

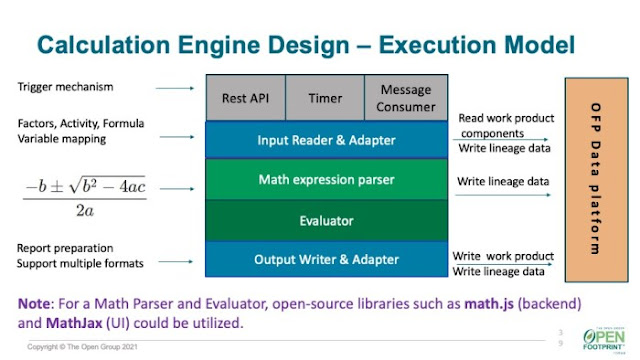

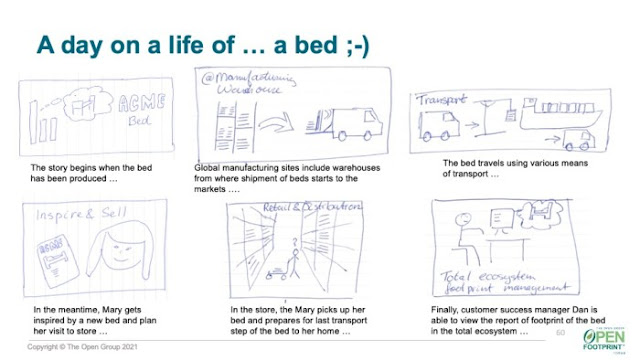

The Open Group Open Footprint™ Forum (OFP) held its first virtual event on June 23-24, 2021. It brought together experts from across the globe to introduce and demonstrate the work that has gone into the Forum since it launched in August 2020. Speakers from industry-leading organizations such as Accenture, AWS, Deloitte, ERM, IBM, Infosys, Shell, WBCSD, and Wipro hosted sessions that outlined the mission of the Open Footprint Forum, explained the Open Footprint Data Platform, and gave live demonstrations of the Platform to show its applicability to all industries.

The Open Group Open Footprint Forum was kicked off by Heidi Karlsson, Director, OFP at The Open Group and Johan Krebbers, VP IT Innovation and GM Emerging Digital Technologies for Shell. Both Heidi and Johan provided an overview and introduction to the Forum, its mission and the work that is already taking place. The aim is to create an industry standard (and Open Source-based) data platform where emissions data of each company can be saved using these same industry standards.

Open Footprint Deliverables

Open Footprint Platform and what is expected by various industries

Thursday, 2 December 2021

Agile Enterprise Solution Architecture

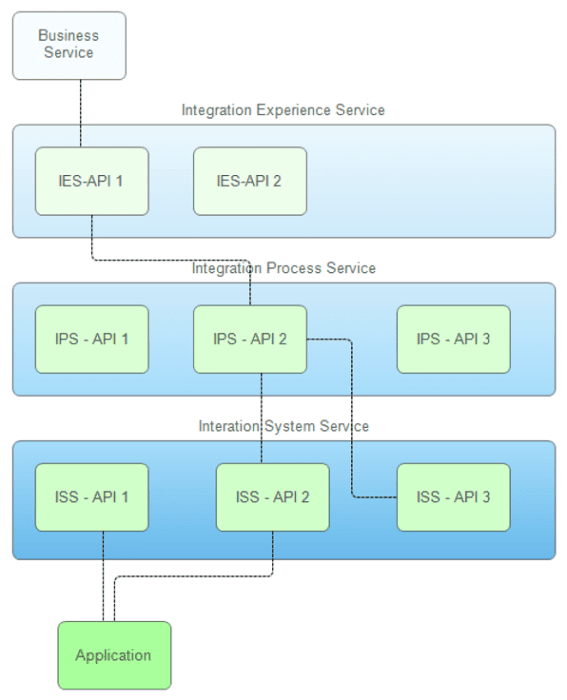

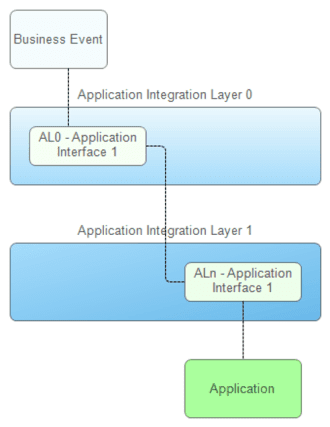

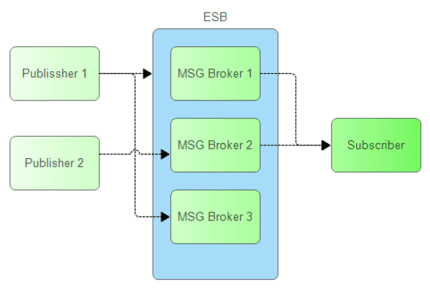

Enterprise Architecture (EA) as an enterprise planning framework has its place and merits, yet it often falls short of real-world IT expectations. The traditional EA approach defines the to-be state architecture that may exist for a while but does not last long. In most cases, an enterprise architect starts from existing architecture and makes improvements. Such improvements are constantly needed because the complex systems that EA guides are subject to constant change. In practice, an enterprise architect is either engaged in strategic planning (including business modeling) or solution-oriented architecture. Most of the routine work of enterprise architects, in fact, involves crafting application or technical architecture. Therefore, what really matters in the IT world is an Enterprise Solution Architecture (ESA) that inter-mingles EA with Solution Architecture (SA) and provides holistic yet pragmatic modeling to enterprise information systems, along with an incremental and iterative approach for agility.

Agile Enterprise Solution Architecture mainly covers five areas: 1) enterprise capability (enterprise-wide organizational considerations of strategic planning, business context, and relevancy), 2) requirement/case scenario (business process and functionality required for enterprise IT systems), 3) architecture overview (including traceable business measurements, architecture patterns, and architectural metrics such as principle, risk, architecture decision, etc.), 4) IT functional service (relationship/interaction, granularity, etc.), and 5) IT infrastructure (operational aspect).

Within this architecture, all high-level statements (business initiative, process, and the like) need to be clearly mapped into ESA elements before they can become part of the architecture. Importantly, the IT service serves as a liaison between the enterprise blueprint and solution architecture. Its focus is on different levels of service interaction, service offer, and service system. At the functional level, for example, IT services can be categorized as interaction service, application logic service, data service and technical service.

Agile ESA helps clarify enterprise-level issues and key stakeholders’ major concerns (or the architecturally significant concerns) by forming critical architectural thinking blocks, especially those in between enterprise strategies and capabilities, business domains and applications, and applications and technologies. For this purpose, it:

◉ Maps inputs from all stakeholders including implicit requirements or objectives

◉ Introduces key architectural metrics to reach realistic architecture decisions

◉ Employs techniques for service abstraction, service interface, and service realization

◉ Incorporates an analysis process for architectural modeling assurance and governance

◉ Advocates just enough architecture and significant architecture of value streams

◉ Instills T-shaped IT expertise via a modeling framework to balance the enterprise capabilities and IT service modeling at the right level of abstraction and correlate between different architectural aspects (business and IT, application and technical, functional and operational, logical and physical)

As a modeling framework, Agile Enterprise Solution Architecture targets the following objectives:

1. Simplified: Eliminate unnecessary complexities in a traditional enterprise architecture, and simplify various architectures into a core model for easy learning and wider adoption

2. Panoramic: Take a holistic approach to trace back and forth key architectural elements (business, technical, and the like), and make enterprise architecture readily applicable and pragmatic

3. IT Service-oriented: Focus on enterprise capabilities and IT services (the primary elements), strike a proper balance between enterprise architecture’s strict definitions and solution architecture’s granular details, resulting in IT service architecture delivery (rather than traditional roadmap, business entity, product, or component)

4. Adaptable: Apply to various architectural styles (enterprise or solution, emergent or intentional, cloud-native or monolithic, software or system-scale, business or application) for ready customization and extension, and architect for changes to meet enterprise growth

Source: opengroup.org

Wednesday, 1 December 2021

How to Use Microservices: A Guide for Enterprise Architects

Many organizations have started to break up a portion of their monolith applications and systems, transitioning to sets of smaller, interconnected microservices.

A recent survey by TechRepublic found that organizations that used microservices were reaping clear benefits: 69% were experiencing faster deployment of services, 61% had greater flexibility to respond to changing conditions, and 56% benefited more from rapidly scaling up new features into large applications.

But when are microservice architectures the best option? When can they offer the most value to an enterprise? And how do they fit in the enterprise architect’s toolbox?

The Benefits of Microservices

Consider a car production assembly line, where task specialization has been introduced to manufacture each part. Individual tasks become more efficient due to dedicated resources, operations, and labor. These newly generated efficiencies drive greater productivity and output, benefiting the overall process.

Similarly, in microservices, or microservice architectures, separate modules are responsible for building and maintaining different components of the end-product. Individual units can be modified and scaled without disrupting the other parts. In contrast, implementing changes to a single, large “monolith” module can be difficult to manage and can quickly become complicated.

Microservice vs. Monolith Architectures

The O’Reilly Microservices Adoption in 2020 report found that “77% of respondents have adopted microservices, with 92% experiencing success with microservices.”

While the advantages of microservice architectures are plentiful, there are also tradeoffs to consider when comparing to monoliths.

In short, microservices still need to be “architected”:

◉ Communication between separate services is more complex. As large applications can contain dozens of services, managing the interactions between modules securely can add extra challenges.

◉ While microservices allow for different programming languages to be used, the deployment and service discovery process is more complicated. A broader knowledge is needed to understand an application’s full scope.

◉ More services equal more resources. For the car manufacturing example above, each task station requires individual tools, workers, and processes. Likewise, a single service may call for a dedicated database, server, and APIs.

How and when to Migrate a Monolith to a Microservices Architecture

When deciding if a microservice architecture is the right option, enterprise architects need to consider their organization’s goals and concerns.

As it’s uncommon for new architectures to be built from the ground up, a migration from one state to another is the most likely scenario. This transition raises the questions: What does the current architecture allow? What does it limit? What are we trying to achieve?

Sam Newman, author of Building Microservices: Designing Fine-Grained Systems, suggests starting with Domain-driven design or DDD. This modeling exercise enables an organization to “figure out what is happening inside the monolith and to determine the units of work from a business-domain point of view”.
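One way to picture this modeling exercise is to tag each module of the monolith with a business domain, then look at which calls would cross a domain boundary if the domains became services. The sketch below is illustrative only and is not taken from Newman's book; the module names, domain assignments and call list are hypothetical.

```python
from collections import defaultdict

# Hypothetical monolith modules, each tagged with a business domain.
MODULE_DOMAIN = {
    "invoice": "Billing",
    "payment": "Billing",
    "cart": "Ordering",
    "checkout": "Ordering",
    "catalog": "Catalog",
}
# Hypothetical call relationships observed inside the monolith (caller, callee).
CALLS = [
    ("checkout", "cart"),
    ("checkout", "payment"),
    ("invoice", "payment"),
    ("cart", "catalog"),
]


def candidate_services():
    """Group modules by business domain as a first cut at service boundaries."""
    services = defaultdict(list)
    for module, domain in MODULE_DOMAIN.items():
        services[domain].append(module)
    return {domain: sorted(modules) for domain, modules in services.items()}


def cross_domain_calls():
    """Calls that would become service-to-service communication after a split."""
    return [(a, b) for a, b in CALLS if MODULE_DOMAIN[a] != MODULE_DOMAIN[b]]


if __name__ == "__main__":
    print(candidate_services())
    print(cross_domain_calls())
```

A long list of cross-domain calls suggests the proposed boundaries would need a lot of inter-service communication, which is one of the tradeoffs noted earlier.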

Considerations when Building a Microservice Architecture

Once an enterprise architecture practice has settled on migrating from one architecture to another, the team can ensure the process is heading in the right direction by:

◉ Determining the level of modelling detail;

◉ Deciding on the applied properties, and;

◉ Setting appropriate KPIs.