Based on a request that stemmed from a discussion earlier in the year, I spent three days at a company’s plant to help them fine-tune their Gemba walks. Several of their floor leaders had attended a training session and approached me with questions. The training highlighted a problem they had been having since they implemented their first safety, quality, delivery, cost (sometimes inventory and/or productivity), morale (SQDCM) board about one year earlier.

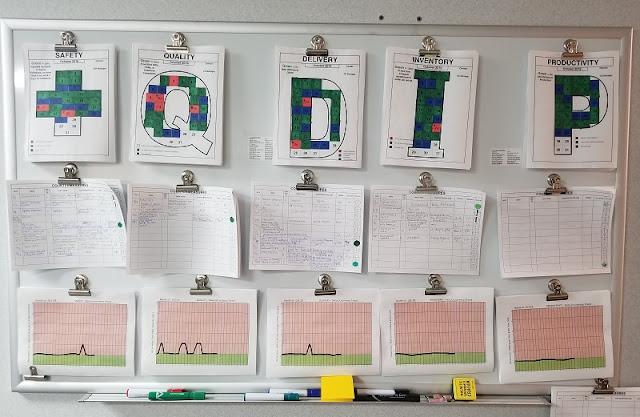

Figure 1: Template of an SQDCM Board

Figure 2: Example of Modified SQDIP Board

Figure 3: Example of Modified SQDIPM Board

Development of a Typical SQDCM Board

Their first SQDCM board described in this example (not shown in photographs) was placed in the department at the middle of both the physical facility and the process flow. They had included an accountability process at the board as well. As the year went by, they expanded into other process areas within the facility.

The metrics that were chosen were, for better or worse, “handed down” from the corporate office located in another city. Those metrics were used at each board, with little or no modification permitted. This led to difficulty for many of the company’s leaders. One big problem: The daily Gemba walk was conducted in the order the boards were created, not in the logical flow of the process. As with so many misunderstood elements in an attempted Lean transformation, the focus was on not being red rather than on identifying and correcting problems.

What did this specifically look like? Red items on the fancy SQDCM letters were transferred down to a countermeasure (C/M) sheet, but in many instances, no actions were identified. There was even a checkbox for “no C/M needed.” (Hint: If you have a red, it should always have a corresponding countermeasure!) In addition, red situations were glossed over quickly so green situations could be highlighted.
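The rule in the hint above (every red gets a countermeasure) is simple enough to check mechanically. The following is a minimal sketch of that check, not a description of the plant's actual board; the `BoardItem` record and its field names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BoardItem:
    # Hypothetical record for one SQDCM letter on one day (illustrative only).
    category: str                     # "S", "Q", "D", "C" or "M"
    status: str                       # "red" or "green"
    countermeasures: list = field(default_factory=list)


def reds_without_countermeasure(items):
    """Return the red items that have no countermeasure written down."""
    return [i for i in items if i.status == "red" and not i.countermeasures]


board = [
    BoardItem("S", "green"),
    BoardItem("Q", "red", ["Sort suspect parts", "Find the cause of the defect"]),
    BoardItem("D", "red"),            # red, but nothing on the C/M sheet
]

missing = reds_without_countermeasure(board)
print([i.category for i in missing])  # prints ['D']
```

Under this check there is no valid "no C/M needed" state for a red item: an empty countermeasure list on a red is always flagged.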

None of this is uncommon. Too many organizations attempting either to “do Lean” or to transform simply don’t understand the concepts and theory behind the numerous tools. SQDCM isn’t simply putting those letters up on a board. Gemba walks aren’t simply management by walking around (MBWA) – popular in the ’80s and ’90s. Strategic deployment isn’t simply an X-matrix in the CEO’s office or, even worse, an annual operating plan.

When organizations truly want to benefit from Lean thinking, the leadership understands this requires a transformation – the entire business needs to change the way it operates. Most importantly, the organization needs to understand that Lean is a holistic system. This is why a tree is one of the most common visualizations of Lean. The roots, the soil, the trunk, the branches, the leaves, the sun and the rain all must work together for the tree to maximize its potential. Simply dropping a seed in the ground doesn’t guarantee success.

Tool Use of SQDCM Boards

So, what should this complete transformation look like? Let’s start with two points of basic tool use of SQDCM boards.

1. Always start at the point closest to the customer. It doesn’t matter how good your manufacturing is if you can’t get product out of shipping. Put your first board there. See how you are performing to your external customer. Then put the boards in place moving backward. That might be warehouse, packing, painting, assembly – whatever the process is that delivers to shipping. Don’t wait months between boards. Push for one every week or two. End with boards in scheduling, purchasing and maintenance. If appropriate, get boards to HR, finance, sales, customer service and R&D too. Just remember – start closest to the customer and work your way backward.

2. Determine the right metrics for the boards. Safety is always first. The metrics are presented in the name in order of priority (safety, quality, delivery, cost [sometimes inventory and/or productivity], morale – although I often move morale ahead of safety, in keeping with Toyota’s respect-for-people mantra). The metrics at the floor need to relate to the floor but also need to tie to company goals.

Floor-Specific Goals

Boards that have the same percentage metric or labor/hour metric across departments aren’t scaled to reflect the specific areas. (I’ve never seen shipping, assembly and machining have identical outcomes.) Pay close attention to the behavior the metric drives. For example, with the OSHA total recordable incident rate (TRIR), either the goal becomes impossible because of injuries, or employees hide injuries to avoid affecting it. Instead, try measuring something like “more than five safety opportunities identified each week.” This is a proactive goal. In theory, the more opportunities identified, the fewer actual injuries occur. Thus, the floor metric is supporting the corporate metric of reduced recordable injuries.
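To make the contrast concrete, here is a small sketch that puts the lagging corporate number next to the proactive floor goal. The rate uses the standard OSHA incidence-rate formula (recordable cases times 200,000, divided by hours worked). The function names and the sample numbers are illustrative assumptions, not figures from the plant.

```python
def trir(recordable_cases, hours_worked):
    """OSHA incidence rate: recordable cases per 100 full-time workers per year."""
    # 200,000 = 100 employees x 40 hours/week x 50 weeks/year
    return recordable_cases * 200_000 / hours_worked


def safety_goal_met(opportunities_this_week, target=5):
    """Proactive floor goal from the text: more than five opportunities a week."""
    return opportunities_this_week > target


# Illustrative numbers only.
print(trir(3, 400_000))       # prints 1.5
print(safety_goal_met(7))     # prints True
```

The first number can only be reported after someone is hurt. The second can be acted on every week at the board.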

It is important to note that not only do these goals need to be floor-specific (as opposed to company-specific), but they also need to be customer-driven. Each department’s customer is the next department in line. Is the machining department delivering product at spec, on time to assembly? Is shipping delivering to the delivery company in the same way? If the organization can deliver on time internally, corporate delivery performance will be improved. Likewise, each department should be managing inventory. Be careful not to simply manage dollars or pieces. Rather, the inventory goal should be reflected by inventory turns and stock-outs. These two metrics drive each department to control their inventory level and strive to have the right inventory on hand. This helps control cost at the corporate level.
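As a rough sketch of the two inventory measures, the snippet below computes turns and counts stock-outs for one department. It assumes the common definition of turns (cost of goods used over a period divided by average inventory value over the same period) and treats a stock-out as any day with nothing on hand. The function names and sample values are hypothetical.

```python
def inventory_turns(cost_of_goods_used, average_inventory_value):
    """Turns for a period: how many times the inventory was consumed and replaced."""
    return cost_of_goods_used / average_inventory_value


def stock_out_days(daily_on_hand):
    """Count the days on which the on-hand quantity reached zero."""
    return sum(1 for quantity in daily_on_hand if quantity <= 0)


# Illustrative numbers only.
print(inventory_turns(1_200_000, 100_000))    # prints 12.0
print(stock_out_days([40, 12, 0, 25, 0]))     # prints 2
```

The two measures pull against each other, which is the point: cutting inventory raises turns but risks stock-outs, so a department has to hold the right inventory rather than simply less of it.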

Connection with Strategic Deployment

Now, tie this to strategic deployment (SD). As noted above, the plant SQDCM should connect to the corporate goals. This means there should be deployment of those SQDCM goals from top to bottom.

Figure 4: Connect the Plant SQDCM to the Overall SD

When the X-matrix is created, it should be a three- to five-year plan, with annual achievements. The targets to improve should be related to the stretch goals of the organization (or facility). The plant referred to in the beginning of the article faltered here. They simply took the corporate deployment goals (more like annual operating plan goals) and rolled them into department SQDCM boards. This made the measurement cumbersome and difficult to relate to employees, and it stifled discussion during the Gemba walks.

Strategic Deployment Is Critical

Early in my career, we started with SQDCM boards without SD. I try not to do this now. For our first 18 months, we struggled with the process. When we initiated SD, it seemed to tie everything together for our managers. It didn’t affect the direct labor employees, simply because our initial SQDCM was already floor-specific (there was no strategic deployment at the time). Note: Initial attempts at this process can be time-consuming for an organization. However, the planning becomes both easier and faster with experience.

Here are a couple of other tips for the SD process.

1. Start the process in October. This ensures the organization is ready by January. Remember, a strong SD process has catchball – the two-way process of goal discussion going from executive offices to shop floor.

2. Build a Gantt chart into the SD process. Many first- and second-year SD processes forget about timelines. Plants expect to hit goals in January when the projects aren’t even active until April.
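The timeline problem in the second tip can be caught with a very small check: compare each goal's due date with the start date of the project meant to deliver it. This is only a sketch; the project names, dates and dictionary keys are made up for illustration.

```python
from datetime import date

# Hypothetical SD projects; names and dates are illustrative only.
projects = [
    {"name": "Reduce changeover time",
     "start": date(2025, 4, 1), "goal_due": date(2025, 1, 31)},
    {"name": "Shipping accuracy audit",
     "start": date(2025, 1, 6), "goal_due": date(2025, 3, 31)},
]


def goals_due_before_start(projects):
    """Return the names of projects whose goal is due before work even begins."""
    return [p["name"] for p in projects if p["goal_due"] < p["start"]]


print(goals_due_before_start(projects))   # prints ['Reduce changeover time']
```

A Gantt chart makes the same mismatch visible at a glance: the goal marker sits to the left of the bar for the project.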

As shown in Figure 5, the goal of the SQDCM board isn’t the pretty green or red letters at the top, but rather driving problem solving. The focus should be on cause identification and solution implementation, not on the question “Are we red or green?”

Figure 5: Root Cause Identification and Problem Solutions

Gemba Walk

Next, the Gemba walk. There are many ways to conduct the walk process. There can be different tiers, different walk frequencies and different expectations of leaders. What follows is my way. To start, the highest level in the facility (often identified as the senior staff) should be on the walk daily. Each department in the facility should also be represented (this often extends beyond the senior staff). Strong walks are supported by leaders holding team huddles at the start of the shift, before the walk. These huddles include the leaders and their hourly employees.

The walk should start closest to the customer, just like board implementation. The walk works back until it ends at receiving (or sometimes sales/customer service/R&D). Green is acknowledged, but red is discussed. What was the problem, what caused it, what temporary countermeasure was used, what is the next step for a long-term C/M? The temporary C/M is often where teams stop in the process (if they determine anything at all). This displays a lack of Lean understanding.

The challenge is to determine the long-term C/M. It is important to note that the most critical part of the SQDCM board is not the pretty green or red letters, but rather the countermeasure sheet. This shows the actions to get the department (or facility or company) back to green. In addition, the lower information on the board often reflects trends over time. This, too, can be critical to ensure departments aren’t chasing squirrels and missing the bigger picture. The focus should always be on the ideal state (defined as 1×1, on-demand, on-time, with perfect quality, safely created and delivered, at the best cost).

Source: isixsigma.com